Regression Techniques

Regression Techniques

Objectives

- Create linear regression models to predict a continuous variable with another continuous variable

- Use multiple linear regression to predict a continuous variable with more than one predictor variable

- Assess regression results with root mean square error (RMSE) and R-squared

- Perform regression diagnostics to assess whether assumptions are met

Overview

Before we investigate machine learning methods, this module will provide some examples of linear regression techniques to predict a continuous variable. Similar to machine learning, linear regression equations are generated by fitting to training samples. Once the model is generated, it can then be used to predict new samples. If more than one independent variable is used to predict the dependent variable, this is known as multiple regression. Since regression techniques are parametric and have assumptions, you will also need to perform diagnostics to make sure that assumptions are not violated.

Regression techniques are heavily used for data analysis; thus, this is a broad topic, which we will not cover in detail. For example, it is possible to use regression to predict categories or probabilities using logistic regression. It is possible to incorporate categorical predictor variables as dummy variables, model non-linear relationships with higher order terms and polynomials, and incorporate interactions. Regression techniques are also used for statistical inference; for example, ANOVA is based on regression. If you have an interest in learning more about regression, there are a variety of resources on this topic. Also, the syntax demonstrated here can be adapted to more complex techniques.

Quick-R provides examples of regression techniques here and regression diagnostics here.

In this example, we will attempt to predict the percentage of a county population that has earned at least a bachelor’s degree using other continuous variables. I obtained these data from the United States Census as estimated data as opposed to survey results. Here is a description of the provided variables. The link at the bottom of the page provides the example data and R Markdown file used to generate this module.

- per_no_hus: percent of households with no father present

- per_with_child_u18: percent of households with at least one child younger than 18

- avg_fam_size: average size of families

- percent_fem_div_15: percent of females over 15 years old that are divorced

- per_25_bach: percent of population over 25 years old with at least a bachelor’s degree

- per_native_born: percent of population born in United States

- per_eng_only: percent of population that only speak English

- per_broadband: percent of households with broadband access

Preparation

Before beginning the analysis, you will need to read in the required packages and data.

library(ggplot2)

library(GGally)

library(dplyr)

library(Metrics)

library(car)

library(gvlma)data <- read.csv("D:/mydata/regression/census_data.csv")Linear Regression

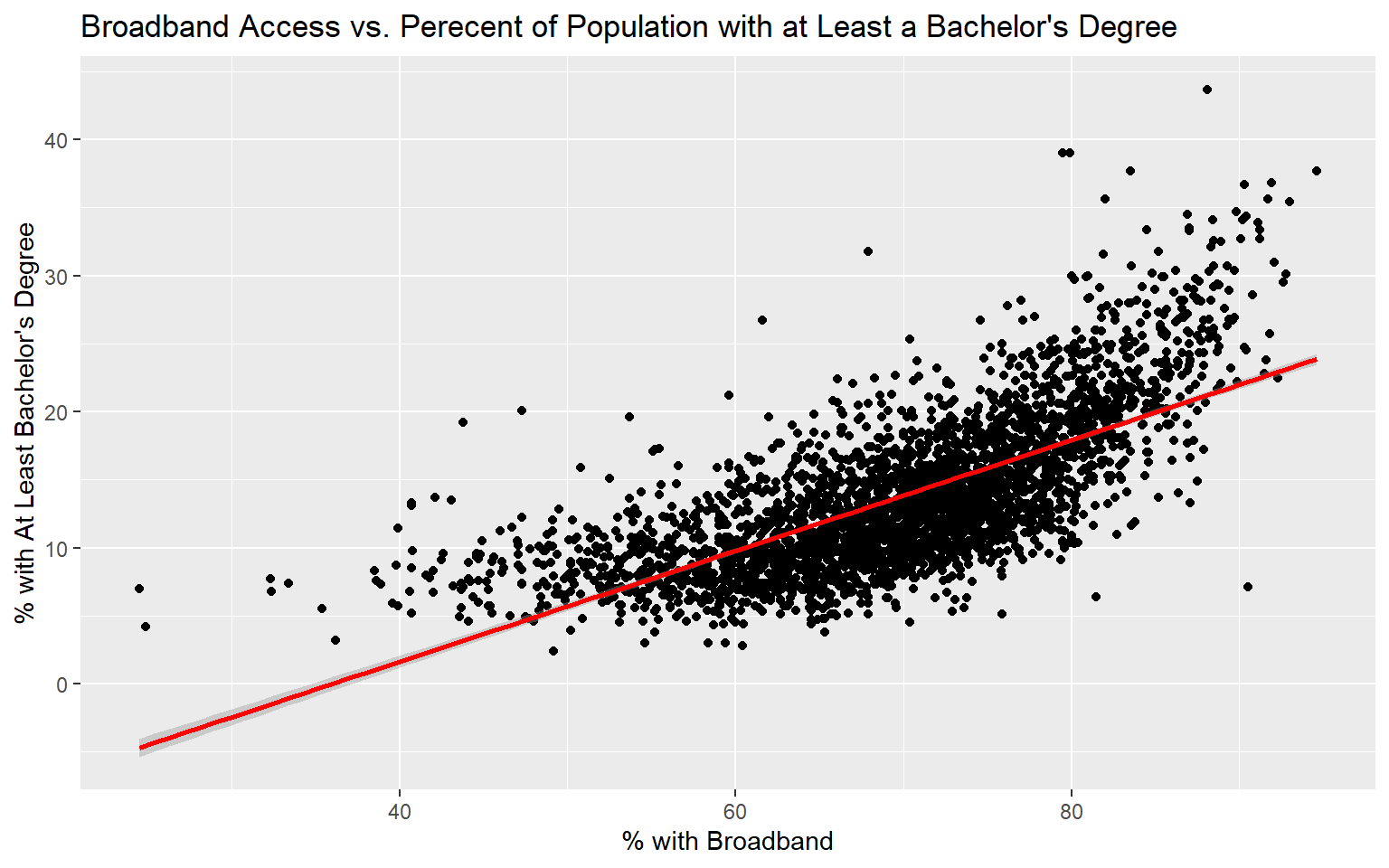

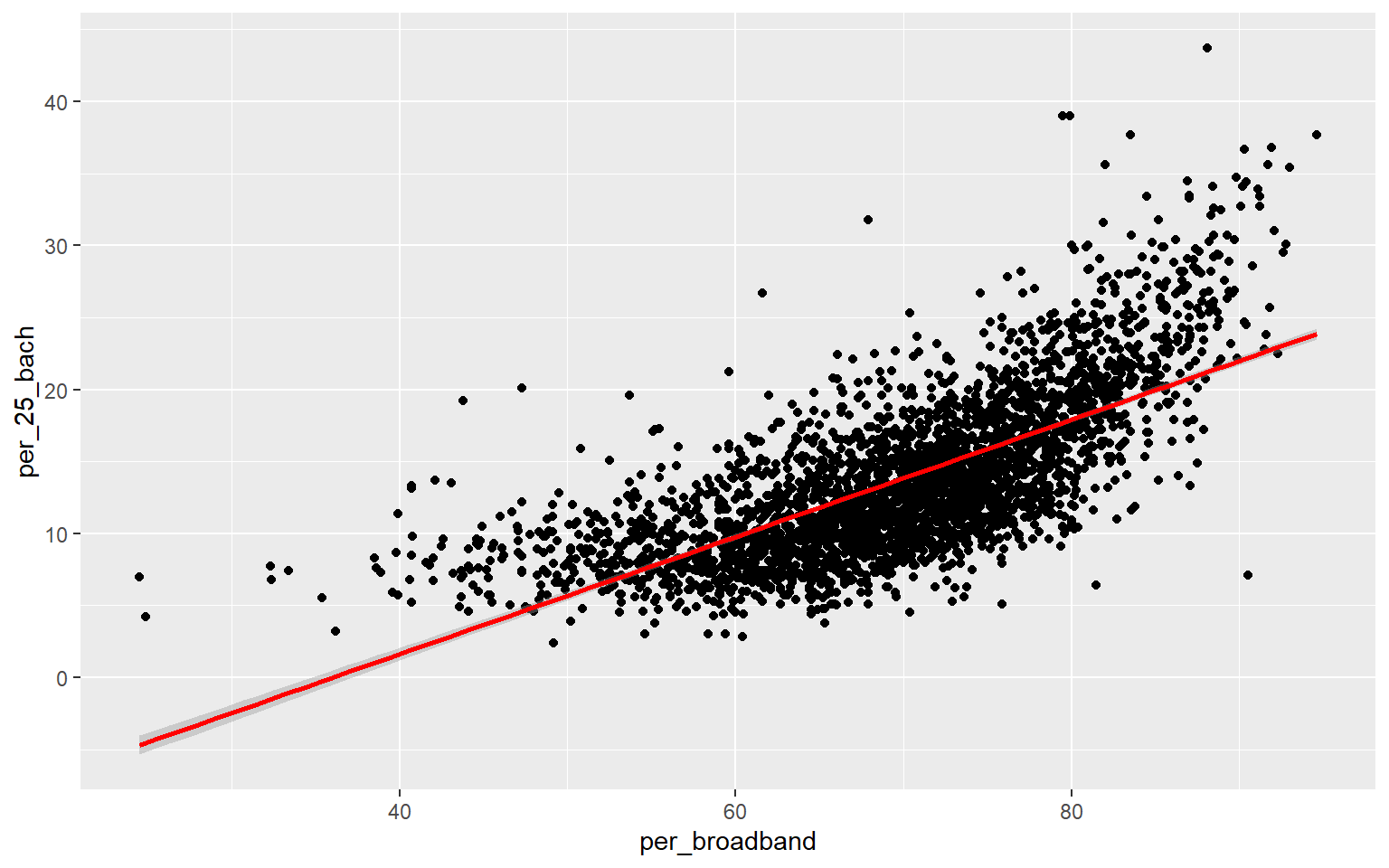

Before I attempt to predict the percent of the county population with at least a bachelor’s degree with all the other provided variables, I will make a prediction using only the percent with broadband access. To visualize the relationship, I first generate a scatter plot using ggplot2. Although not perfect, it does appear that there is a relationship between these two variables. Or, higher percent access to broadband seems to be correlated with secondary degree attainment rate. This could be completely coincidental; correlation is not causation.

ggplot(data, aes(x=per_broadband, y=per_25_bach))+

geom_point()+

geom_smooth(method="lm", col="red")+

ggtitle("Broadband Access vs. Perecent of Population with at Least a Bachelor's Degree")+

labs(x="% with Broadband", y="% with At Least Bachelor's Degree")

Fitting a linear model in R can be accomplished with the base R lm() function. Here, I am providing a formula. The left-side of the formula will contain the dependent variable, or the variable being predicted, while the right-side will reference one or more predictor variables. You also need to provide a data argument.

Calling summary() on the linear model will print a summary of the results. The fitted model is as follows:

per_25_bach = -14.6 + 0.41*per_broadband

Since the coefficient for the percent broadband variable is positive, this suggests that there is a positive or direct relationship between the two variables, as previously suggested by the graph generated above. The residuals vary from -15.1% to 22.5% with a median of -0.4%. The F-statistic is statistically significant, which suggest that this model is performing better than simply returning the mean value of the data set as the prediction. The R-squared value is 0.50, suggesting that roughly 50% of the variability in percent secondary degree attainment is explained by percent broadband access. In short, there is some correlation between the variables but not enough to make a strong prediction.

m1 <- lm(per_25_bach ~ per_broadband, data=data)

summary(m1)

Call:

lm(formula = per_25_bach ~ per_broadband, data = data)

Residuals:

Min 1Q Median 3Q Max

-15.0993 -2.7609 -0.4354 2.2977 22.4786

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -14.67830 0.51252 -28.64 <2e-16 ***

per_broadband 0.40749 0.00726 56.12 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 3.948 on 3140 degrees of freedom

Multiple R-squared: 0.5008, Adjusted R-squared: 0.5006

F-statistic: 3150 on 1 and 3140 DF, p-value: < 2.2e-16Fitted models and model summaries are stored as list objects. So, you can find specific components of the model or results within the list. In the code block below, I am extracting the R-squared value from the summary list object.

summary(m1)$r.squared

[1] 0.5007875The Metric package can be used to calculate a variety of summary statistics. In the example below, I am using it to obtain the root mean square error (RMSE) of the model. To do so, I must provide the correct values from the original data table and the predicted or fitted values from the model list object. RMSE is reported in the units of the dependent variable, in this case percent.

rmse(data$per_25_bach, m1$fitted.values)

[1] 3.946617You can also calculate RMSE manually as demonstrated below. This will yield the same answer as the rmse() function.

sqrt(mean((data$per_25_bach - m1$fitted.values)^2))

[1] 3.946617Multiple Linear Regression

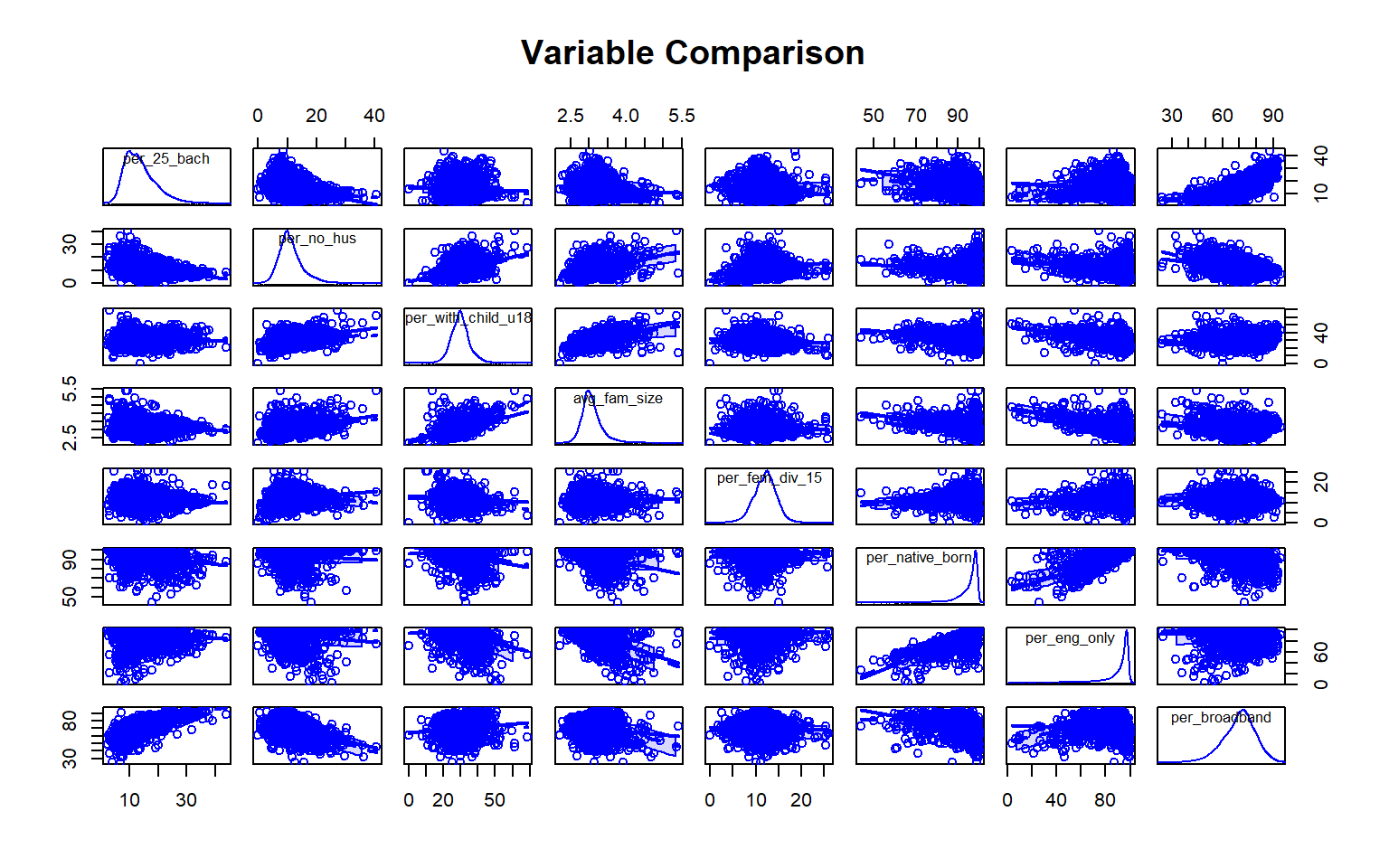

We will now try to predict the percent of the county population with at least a bachelor’s degree using all other variables in the Census data set. First, I generate a scatter plot matrix using the car package to visualize the distribution of the variables and the correlation between pairs. Note also that the percent of grandparents who are caretakers for at least one grandchild variable read in as a factor, so I convert it to a numeric variable before using it.

data$per_gp_gc <- as.numeric(data$per_gp_gc)

scatterplotMatrix(~per_25_bach + per_no_hus + per_with_child_u18 + avg_fam_size + per_fem_div_15 + per_native_born + per_eng_only + per_broadband, data=data, main="Variable Comparison")

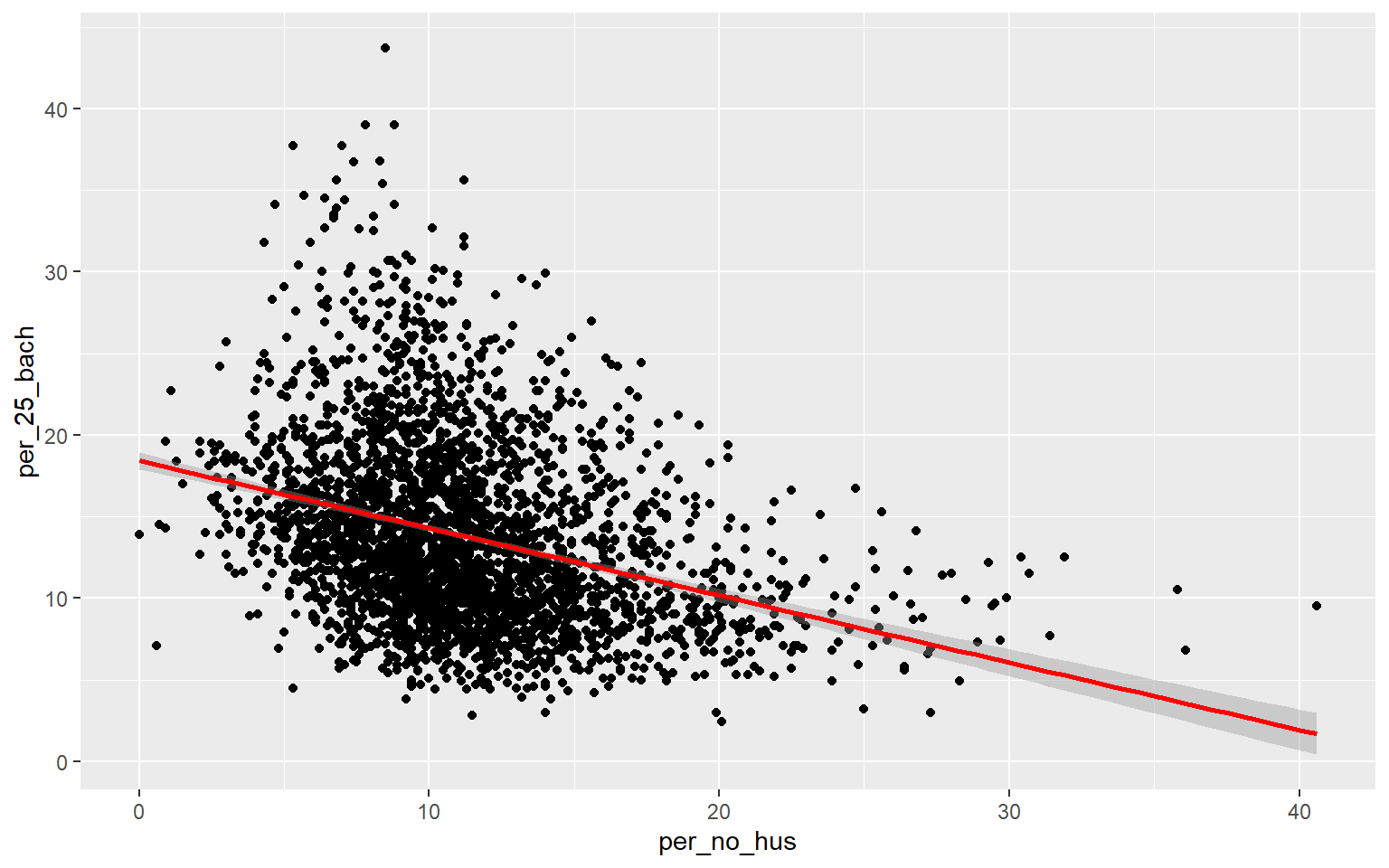

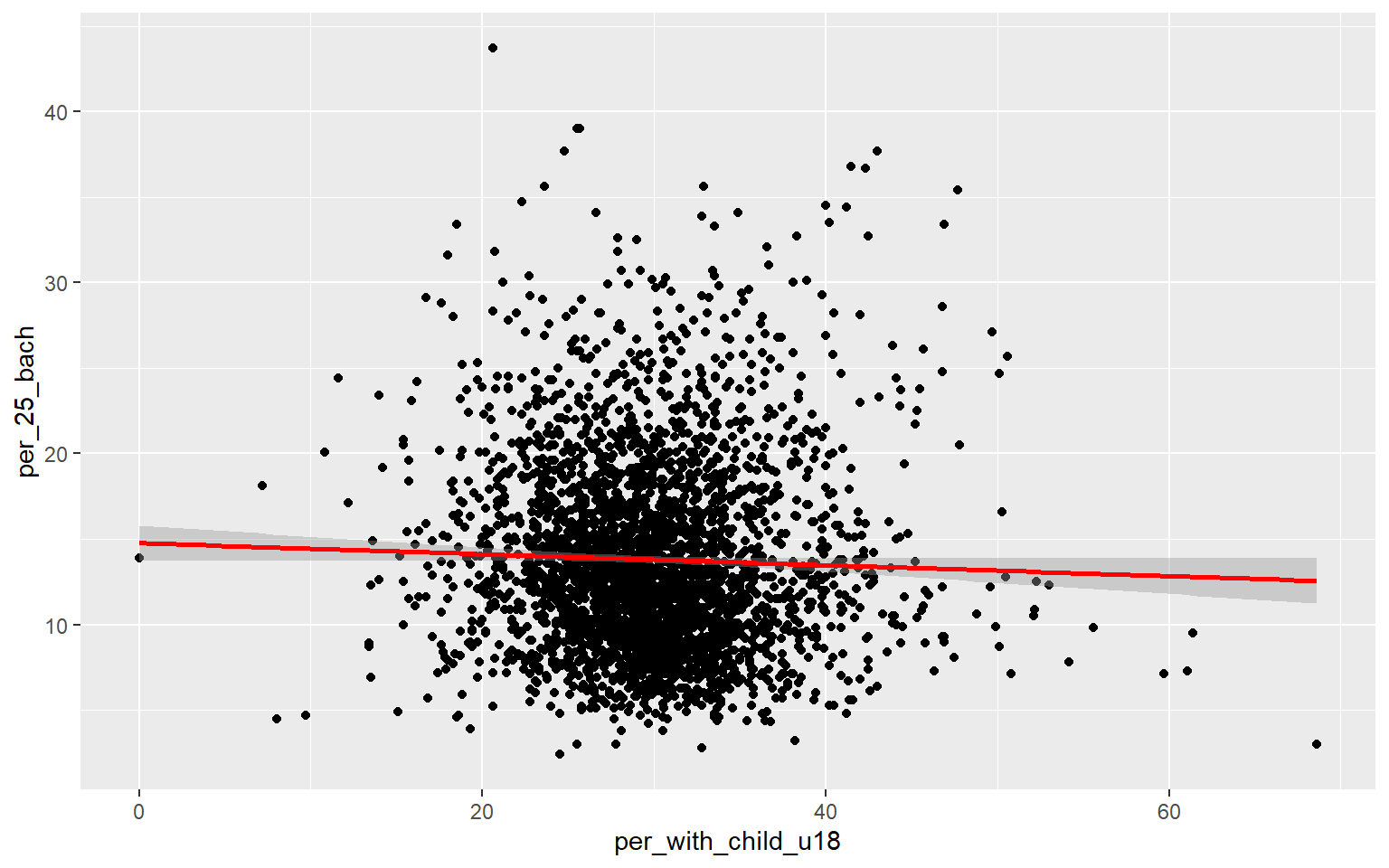

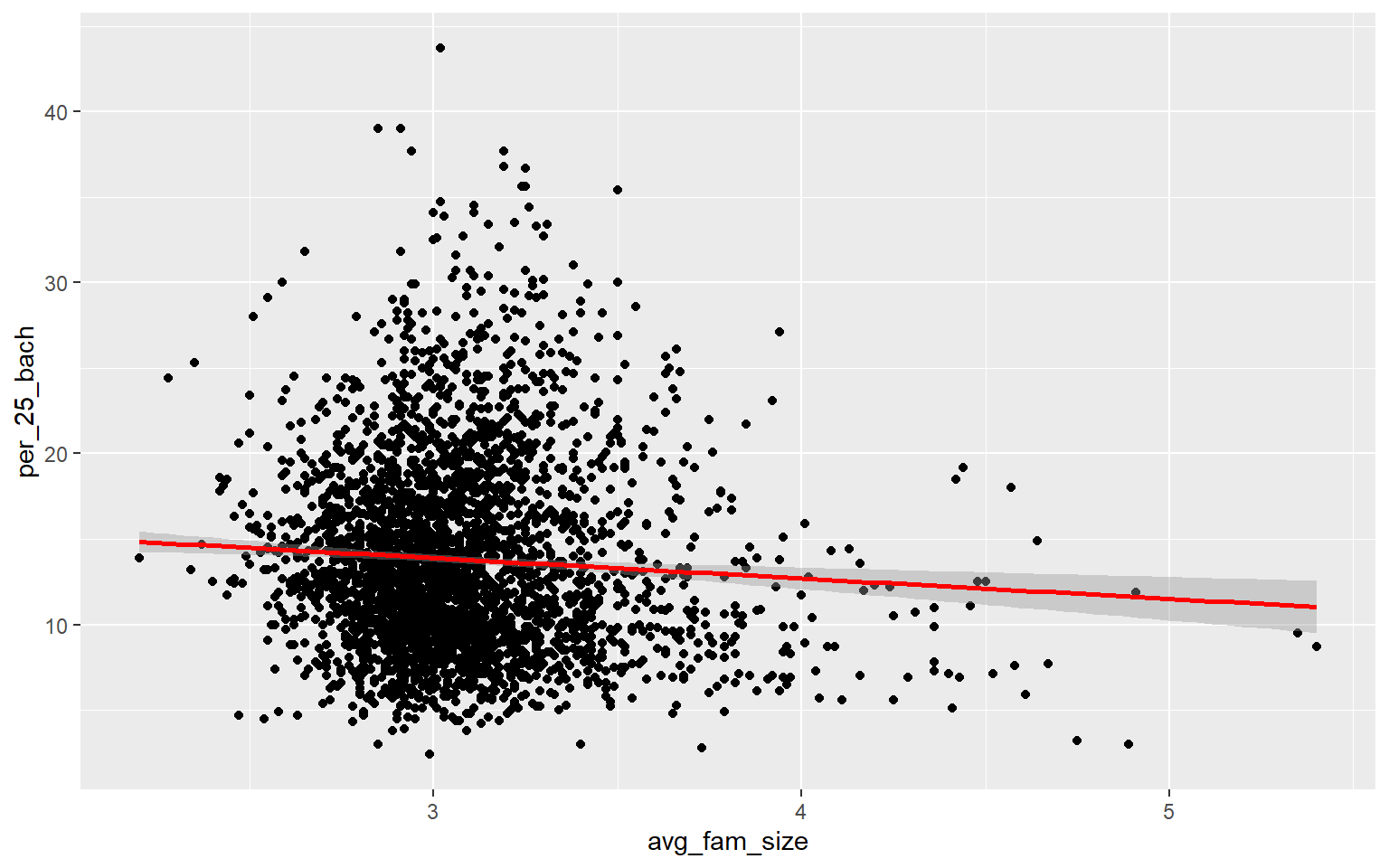

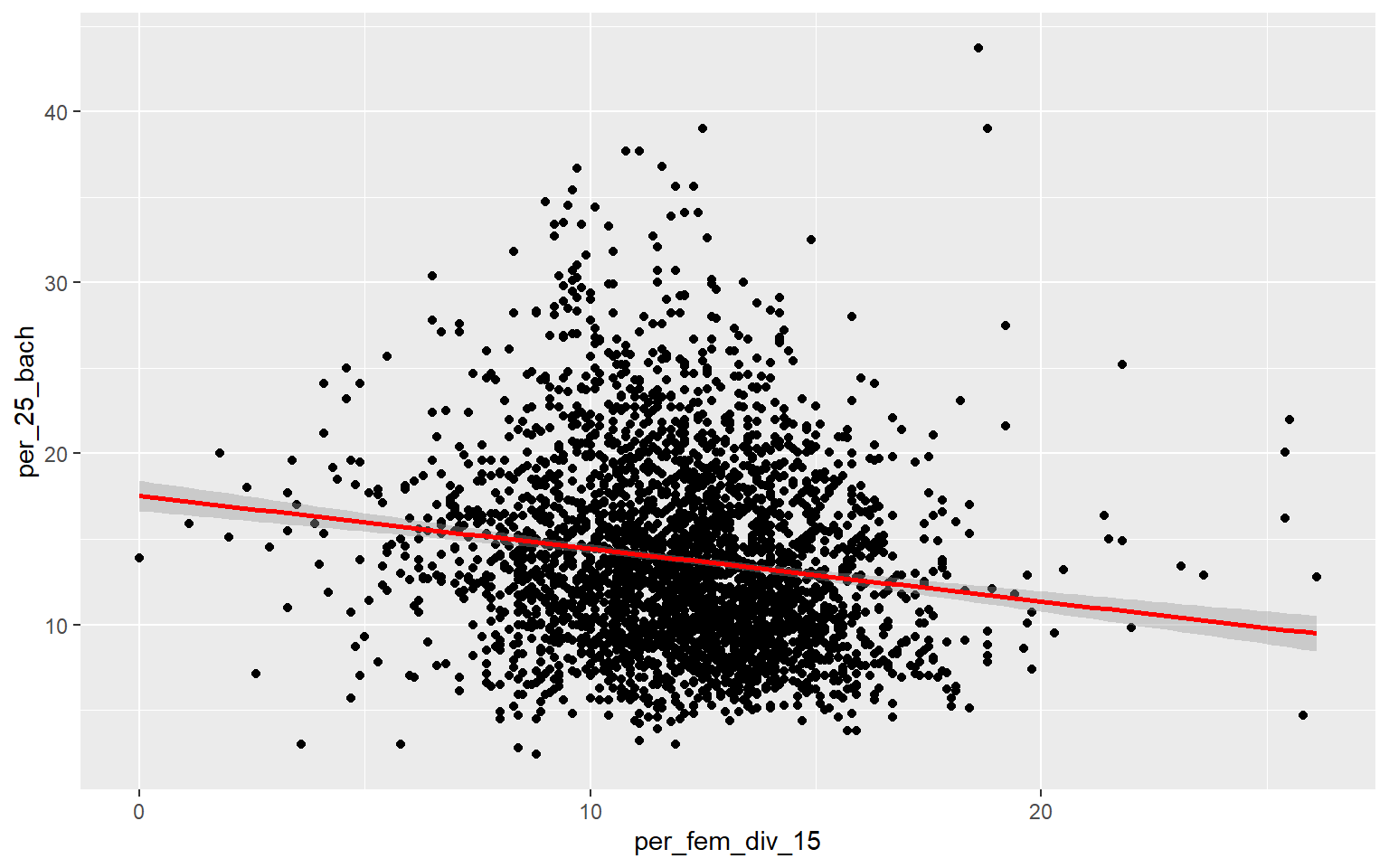

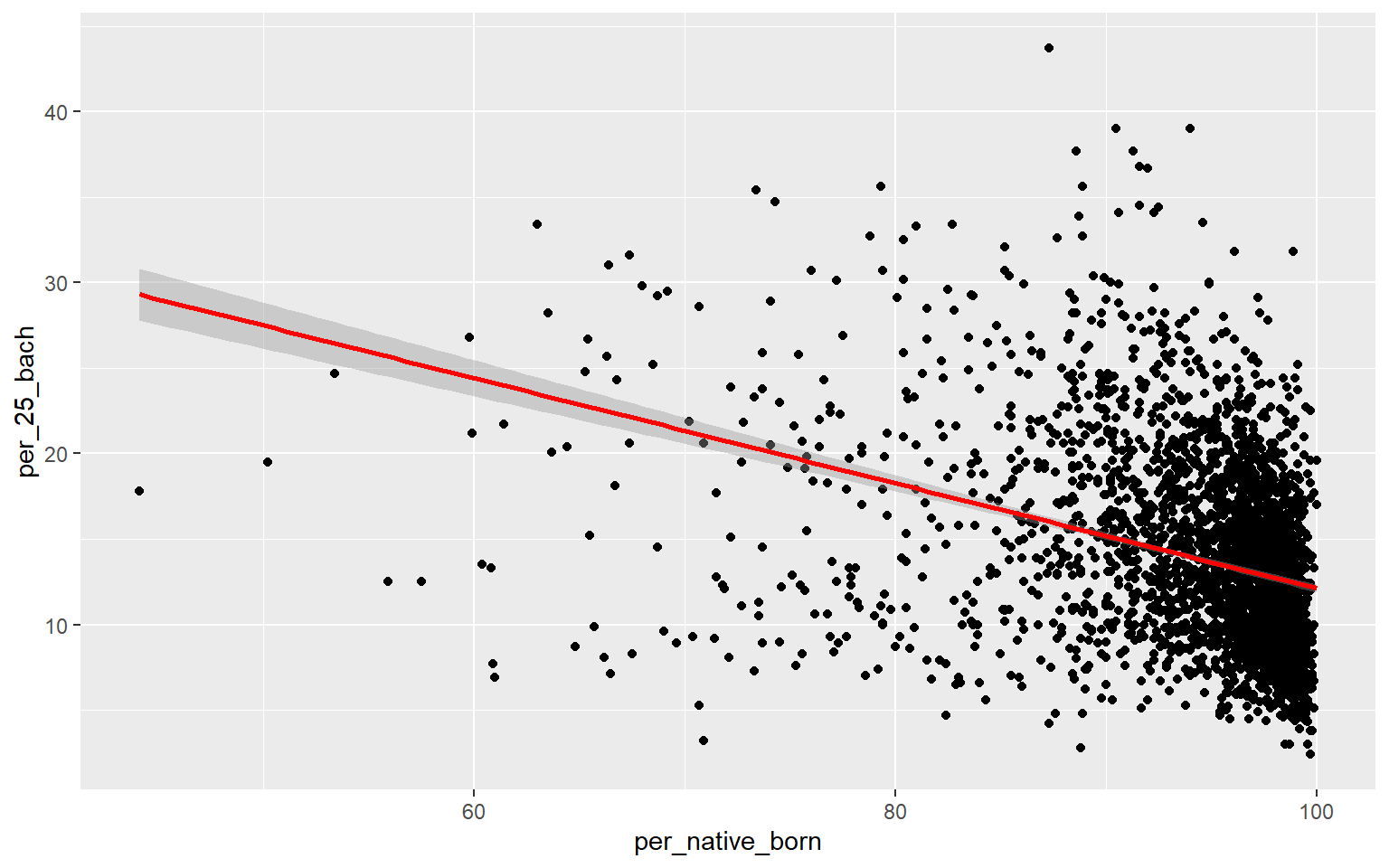

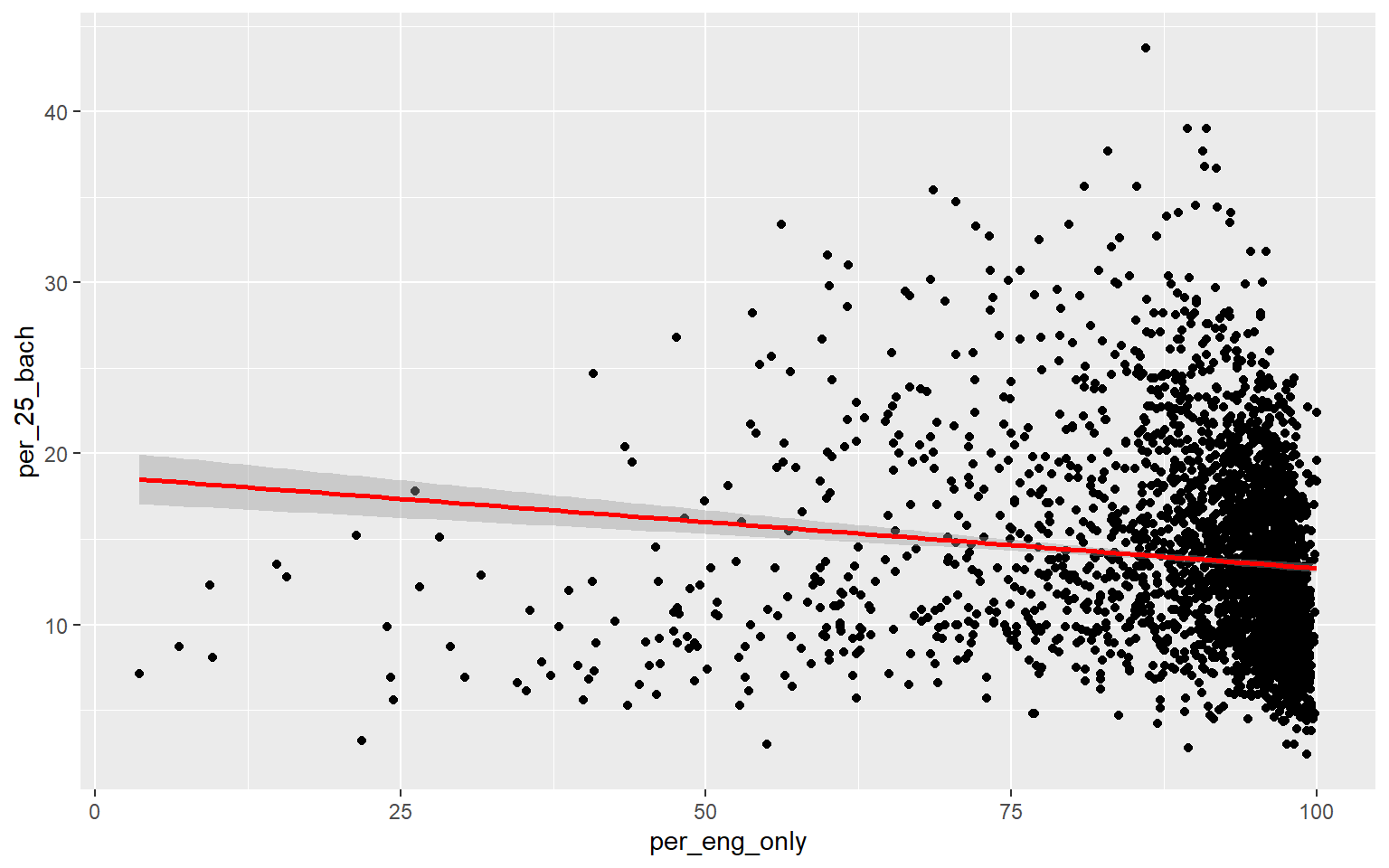

As an alternative to a scatter plot matrix, you could also just create separate scatter plots to compare the dependent variable with each independent variable separately. Take some time to analyze the patterns and correlations. What variables do you think will be most predictive?

ggplot(data, aes(x=per_no_hus, y=per_25_bach))+

geom_point()+

geom_smooth(method="lm", col="red")

ggplot(data, aes(x=per_with_child_u18, y=per_25_bach))+

geom_point()+

geom_smooth(method="lm", col="red")

ggplot(data, aes(x=avg_fam_size, y=per_25_bach))+

geom_point()+

geom_smooth(method="lm", col="red")

ggplot(data, aes(x=per_fem_div_15, y=per_25_bach))+

geom_point()+

geom_smooth(method="lm", col="red")

ggplot(data, aes(x=per_native_born, y=per_25_bach))+

geom_point()+

geom_smooth(method="lm", col="red")

ggplot(data, aes(x=per_eng_only, y=per_25_bach))+

geom_point()+

geom_smooth(method="lm", col="red")

ggplot(data, aes(x=per_broadband, y=per_25_bach))+

geom_point()+

geom_smooth(method="lm", col="red")

Generating a multiple linear regression model is the same as producing a linear regression model using only one independent variable accept that multiple variables will now be provided on the right-side of the equation. Once the model is generated, the summary() function can be used to explore the results. When more than one predictor variable is used, adjusted R-squared should be used for assessment as opposed to R-squared. The proportion of variance explained increased from 0.50 to 0.56, suggesting that this multiple regression model is performing better than the model created above using only percent broadband access. The F-statistic is also statistically significant, suggesting that the model is better than simply predicting using only the mean value of the dependent variable. When using multiple regression, you should interpret coefficients with caution as they don’t take into account variable correlation and can, thus, be misleading.

m2 <- lm(per_25_bach ~ per_no_hus + per_with_child_u18 + avg_fam_size + per_fem_div_15 + per_native_born + per_eng_only + per_broadband, data = data)

summary(m2)

Call:

lm(formula = per_25_bach ~ per_no_hus + per_with_child_u18 +

avg_fam_size + per_fem_div_15 + per_native_born + per_eng_only +

per_broadband, data = data)

Residuals:

Min 1Q Median 3Q Max

-19.1950 -2.4225 -0.3179 2.0754 22.0502

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 4.041563 2.100332 1.924 0.054414 .

per_no_hus 0.021519 0.020862 1.032 0.302375

per_with_child_u18 -0.199677 0.015448 -12.926 < 2e-16 ***

avg_fam_size 1.678205 0.305947 5.485 4.46e-08 ***

per_fem_div_15 -0.274503 0.026190 -10.481 < 2e-16 ***

per_native_born -0.185967 0.021397 -8.691 < 2e-16 ***

per_eng_only 0.040357 0.011447 3.526 0.000429 ***

per_broadband 0.394104 0.009165 43.003 < 2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 3.715 on 3134 degrees of freedom

Multiple R-squared: 0.5587, Adjusted R-squared: 0.5577

F-statistic: 566.8 on 7 and 3134 DF, p-value: < 2.2e-16The RMSE decreased from 3.95% to 3.69%, again suggesting improvement in the model performance.

rmse(data$per_25_bach, m2$fitted.values)

[1] 3.710757Regression Diagnostics

Once a linear regression model is produced, your work is not done. Since linear regression is a parametric method, you must test to make sure assumptions are not violated. If they are violated, you will need to correct the issue; for example, you may remove variables, transform variables, or use a different modeling method more appropriate for your data.

Here are the assumptions of linear regression:

- Residuals are normally distributed

- No patterns in the residuals relative to values of the dependent variable (homoscedasticity)

- Linear relationship exists between the dependent variable and each independent variable

- No or little multicollinearity between independent variables

- Samples are independent

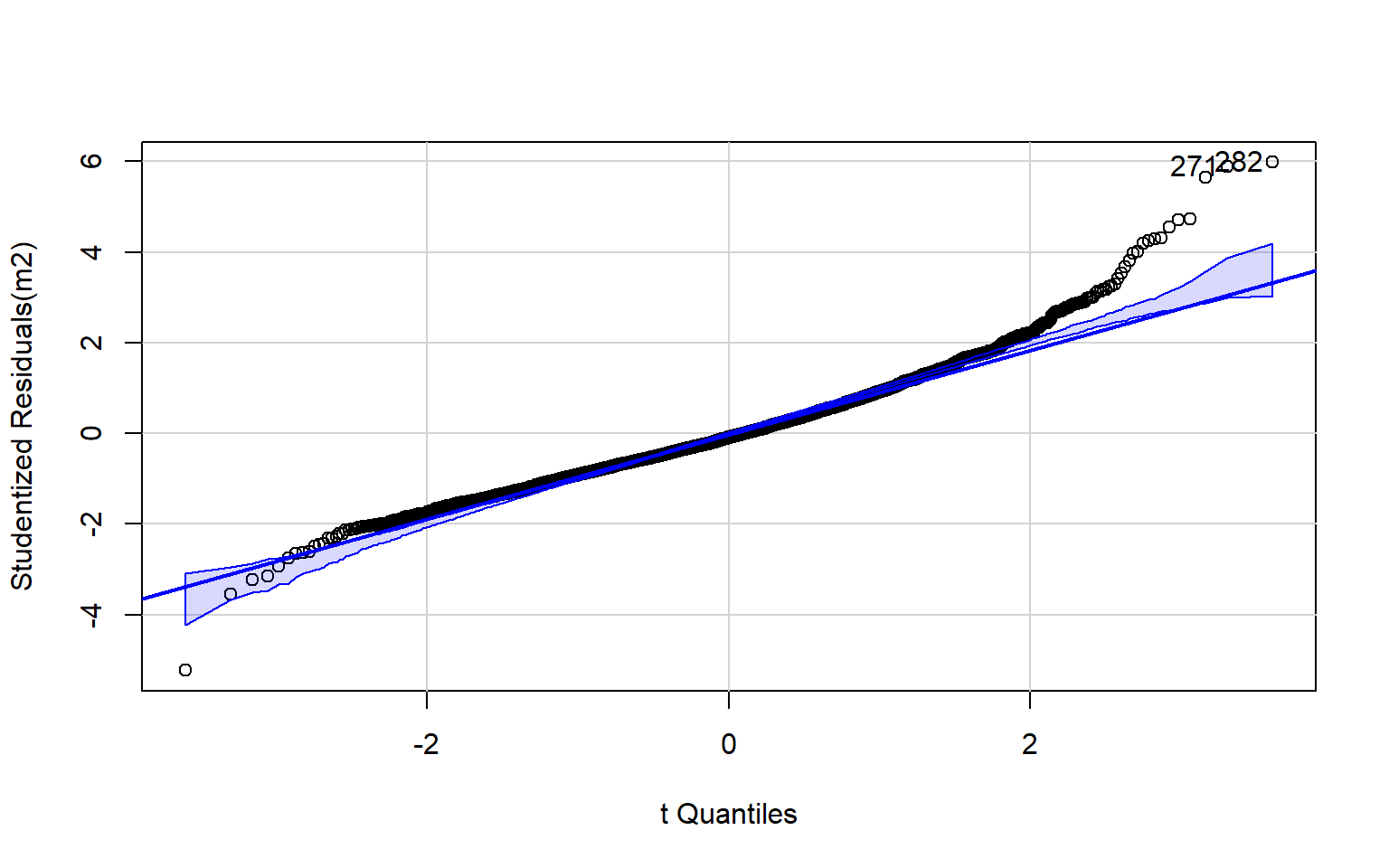

Normality of the residuals can be assessed using a normal Q-Q plot. This plot can be interpreted as follows:

- On line = normal

- Concave = skewed to the right

- Convex = skewed to the left

- High/low off line = kurtosis

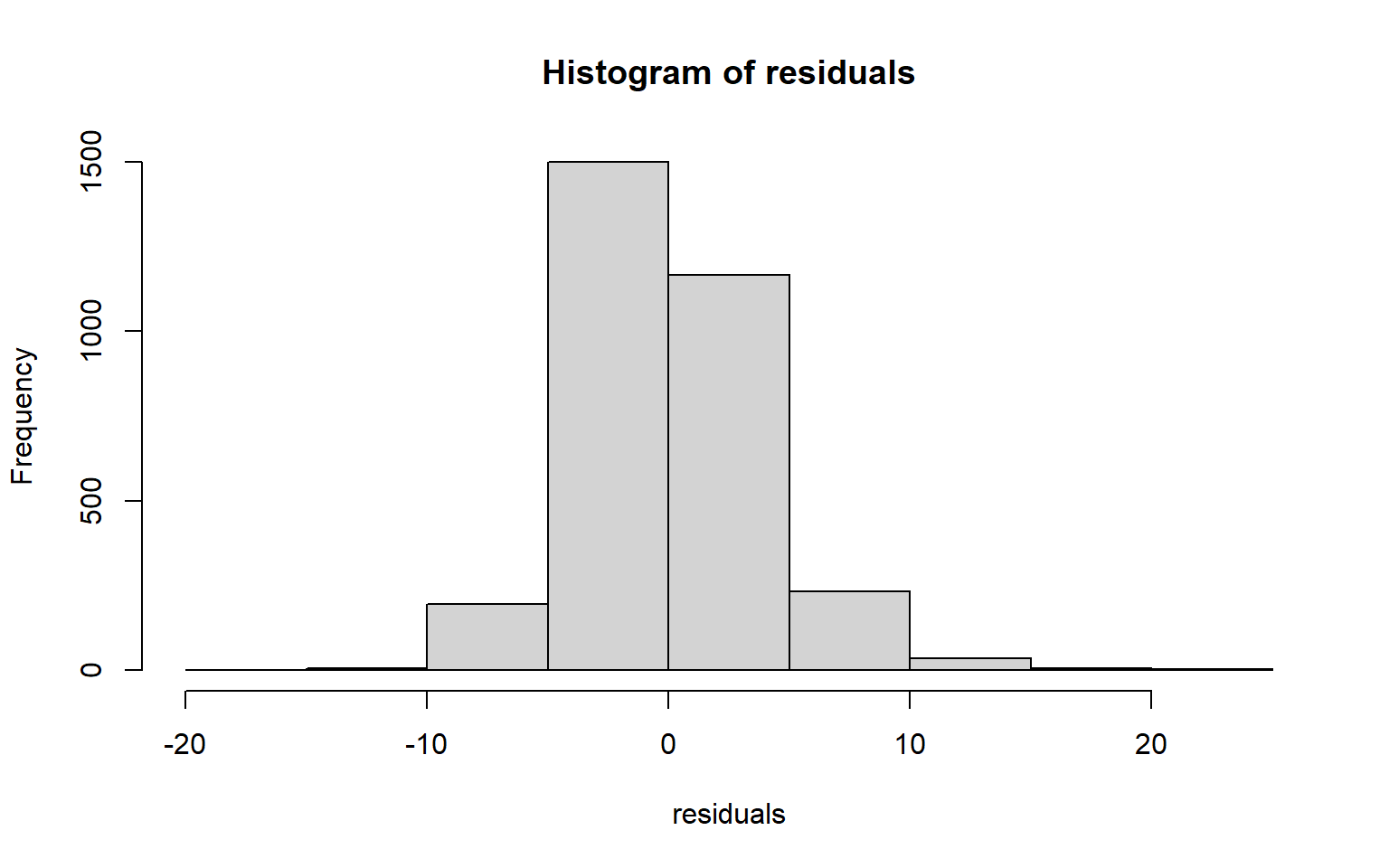

The code block below generates a Q-Q plot using the qqPlot() function from the car package. I have also included a simple histogram created with base R. Generally, this suggests that there may be some kurtosis issues since high and low values diverge from the line.

residuals <- data$per_25_bach - m2$fitted.values

hist(residuals)

qqPlot(m2)

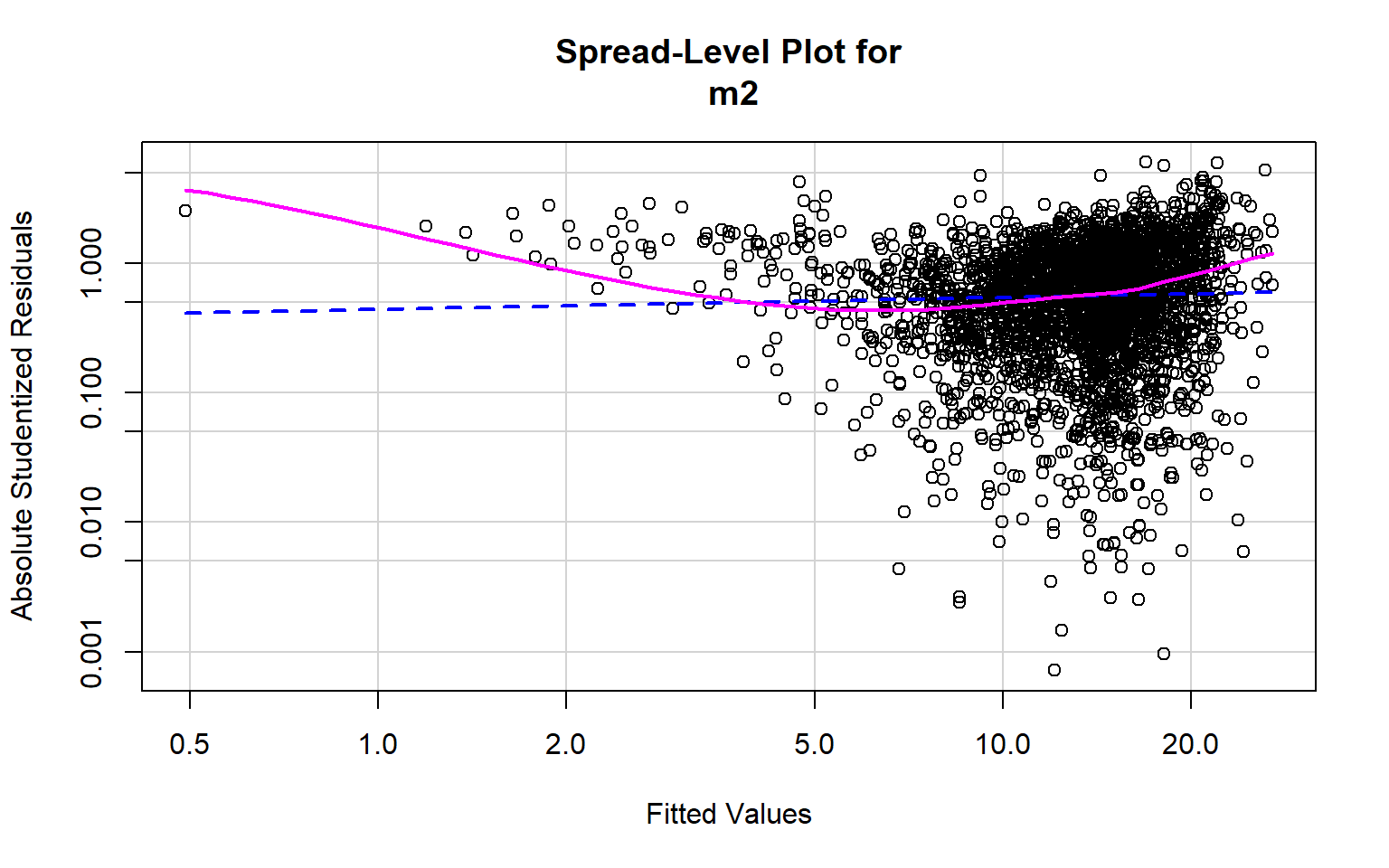

[1] 271 282Optimally, the variability in the residuals should show no pattern when plotted against the values of the dependent variable. Or, the model should not do a better or worse job at predicting high, low, or specific ranges of values of the dependent variable. This is the concept of homoscedasticity. This can be assessed using spread-level plots. In this plot, the residuals are plotted against the fitted values, and no pattern should be observed. The Score Test For Non-Constant Error Variance provides a statistical test of homoscedasticity and can be calculated in R using the ncvTest() function from the car package. In this case I obtain a statistically significant result, which suggests issues in homogeneity of variance. The test also provides a suggested power by which to transform the data.

ncvTest(m2)

Non-constant Variance Score Test

Variance formula: ~ fitted.values

Chisquare = 119.7646, Df = 1, p = < 2.22e-16

spreadLevelPlot(m2)

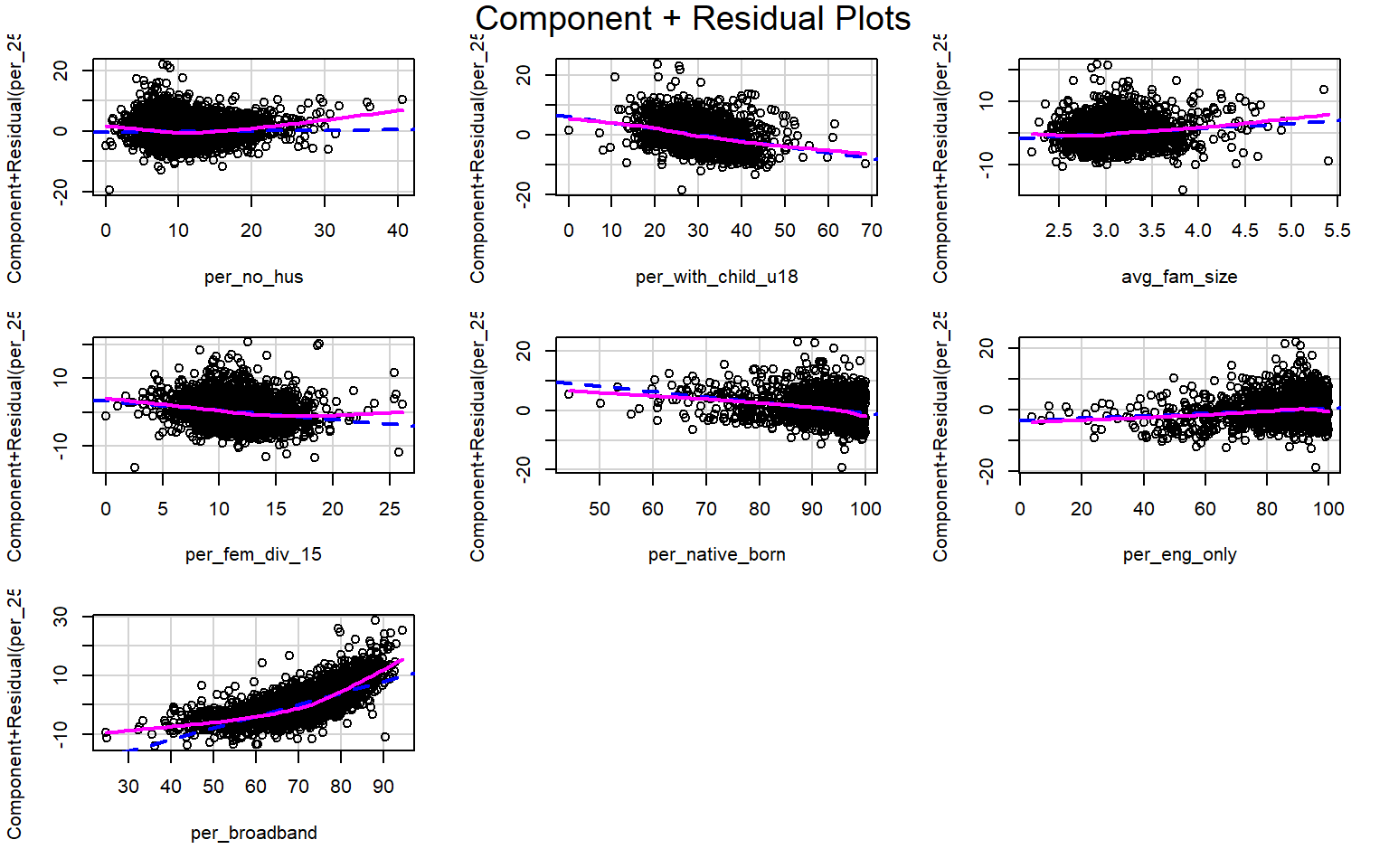

Suggested power transformation: 0.9086395 Component+Residual (Partial Residual) Plots are used to visually assess whether a linear relationship exists between the dependent variable and each independent variable. The trend should be approximated with a line. Curves and other non-linear patterns suggest that the relationship between the variables may not be linear. As an example, the relationship with percent broadband may be better modeled using a function other than a linear one. To make the relationship more linear, a data transformation could be applied.

crPlots(m2)

Multicollinearity between predictor variables can be assessed using the variable inflation factor (VIF). The square root of this value should not be greater than 2. This measure suggests issues with the percent native born and percent English speaking only variables. It would make sense that these variables are correlated, so it might be best to remove one of them from the model. The vif() function is provided by the car package.

vif(m2)

per_no_hus per_with_child_u18 avg_fam_size per_fem_div_15

1.849388 1.802740 1.927171 1.122510

per_native_born per_eng_only per_broadband

3.906954 4.086862 1.798807

sqrt(vif(m2)) > 2 # problem?

per_no_hus per_with_child_u18 avg_fam_size per_fem_div_15

FALSE FALSE FALSE FALSE

per_native_born per_eng_only per_broadband

FALSE TRUE FALSE The gvlma package provides the gvlma() function to test all the assumptions at once. Here are brief explanations of the components of this test.

- Global Stat: combination of all tests, if any component is not satisfied this test will not be satisfied

- Skewness: assess skewness of the residuals relative to a normal distribution

- Kurtosis: assess kurtosis of residuals relative to a normal distribution

- Link Function: assess if linear relationship exists between the dependent variable and all independent variables

- Heteroscedasticity: assess for homoscedasticity

According to this test, the only assumption that is met is homoscedasticity, which was actually found to be an issue in the test performed above. So, this regression analysis is flawed and would need to be augmented to obtain usable results. We would need to experiment with variable transformations and consider dropping some variables. If these augmentations are not successful in satisfying the assumptions, then we may need to use a method other than linear regression.

gvmodel <- gvlma(m2)

summary(gvmodel)

Call:

lm(formula = per_25_bach ~ per_no_hus + per_with_child_u18 +

avg_fam_size + per_fem_div_15 + per_native_born + per_eng_only +

per_broadband, data = data)

Residuals:

Min 1Q Median 3Q Max

-19.1950 -2.4225 -0.3179 2.0754 22.0502

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 4.041563 2.100332 1.924 0.054414 .

per_no_hus 0.021519 0.020862 1.032 0.302375

per_with_child_u18 -0.199677 0.015448 -12.926 < 2e-16 ***

avg_fam_size 1.678205 0.305947 5.485 4.46e-08 ***

per_fem_div_15 -0.274503 0.026190 -10.481 < 2e-16 ***

per_native_born -0.185967 0.021397 -8.691 < 2e-16 ***

per_eng_only 0.040357 0.011447 3.526 0.000429 ***

per_broadband 0.394104 0.009165 43.003 < 2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 3.715 on 3134 degrees of freedom

Multiple R-squared: 0.5587, Adjusted R-squared: 0.5577

F-statistic: 566.8 on 7 and 3134 DF, p-value: < 2.2e-16

ASSESSMENT OF THE LINEAR MODEL ASSUMPTIONS

USING THE GLOBAL TEST ON 4 DEGREES-OF-FREEDOM:

Level of Significance = 0.05

Call:

gvlma(x = m2)

Value p-value Decision

Global Stat 1473.592 0.000000 Assumptions NOT satisfied!

Skewness 270.451 0.000000 Assumptions NOT satisfied!

Kurtosis 656.523 0.000000 Assumptions NOT satisfied!

Link Function 539.511 0.000000 Assumptions NOT satisfied!

Heteroscedasticity 7.107 0.007677 Assumptions NOT satisfied!Regression techniques are also generally susceptible to the impact of outliers. The outlierTest() function from the car package provides an assessment for the presence of outliers. Using this test, eight records are flagged as outliers. It might be worth experimenting with removing them from the data set to see if this improves the model and/or helps to satisfy the assumptions.

outlierTest(m2)

rstudent unadjusted p-value Bonferroni p

282 5.977347 2.5234e-09 7.9284e-06

271 5.878292 4.5812e-09 1.4394e-05

255 5.647191 1.7767e-08 5.5823e-05

2798 -5.225413 1.8515e-07 5.8173e-04

921 4.732110 2.3204e-06 7.2906e-03

302 4.716742 2.5013e-06 7.8590e-03

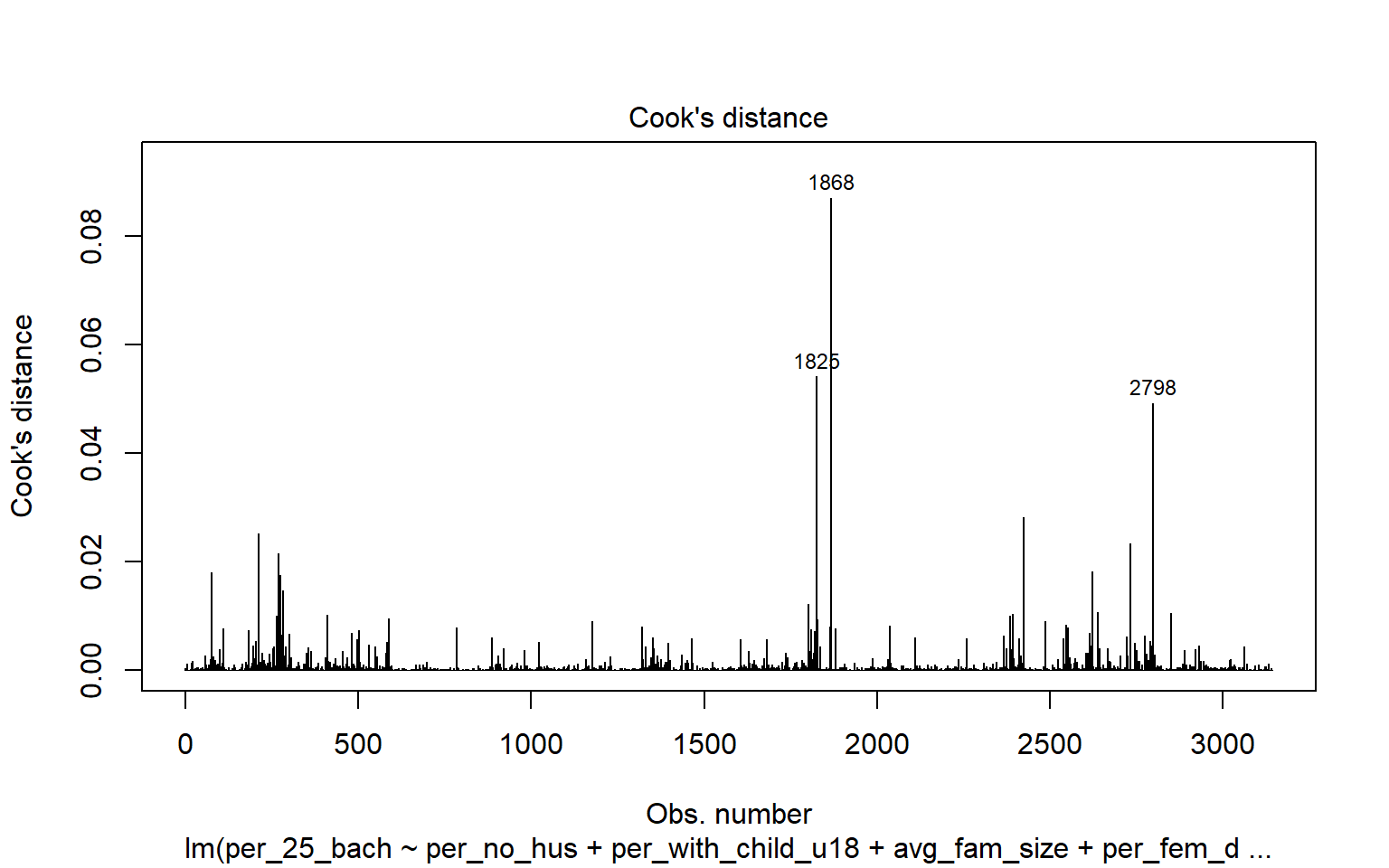

3062 4.548528 5.6072e-06 1.7618e-02Leverage samples are those that have an odd or different combination of independent variable values and, thus, can have a large or disproportionate impact on the model. High leverage points can be assessed using the Cook’s Distance, as shown graphically below. Samples 499, 1042, and 1857 are identified as leverage samples that are having a large impact on the model.

cutoff <- 4/(6-length(m2$coefficients)-2)

plot(m2, which=4, cook.levels=cutoff)

abline(h=cutoff, lty=2, col="red")

Concluding Remarks

The example used here was not optimal for linear regression as many of the model assumptions were not met. So, this problem might be better tackled using a different method, such as machine learning. However, regression can be a powerful and interpretable technique to investigate many problems.